More co-ordination is needed, and better access to data.

Did you know that the wildfires which ravaged Hawaii last summer were started by a secret “weather weapon” being tested by America’s armed forces, and that American ngos were spreading dengue fever in Africa? That Olena Zelenska, Ukraine’s first lady, went on a $1.1m shopping spree on Manhattan’s Fifth Avenue? Or that Narendra Modi, India’s prime minister, has been endorsed in a new song by Mahendra Kapoor, an Indian singer who died in 2008?

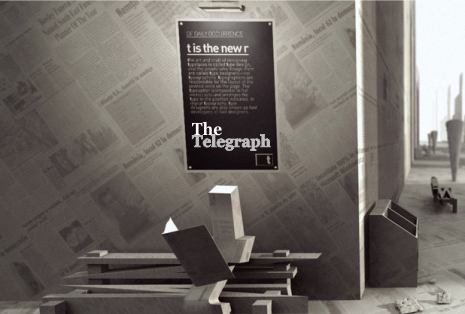

These stories are, of course, all bogus. They are examples of disinformation: falsehoods that are intended to deceive. Such tall tales are being spread around the world by increasingly sophisticated campaigns. Whizzy artificial-intelligence (ai) tools and intricate networks of social-media accounts are being used to make and share eerily convincing photos, video and audio, confusing fact with fiction. In a year when half the world is holding elections, this is fuelling fears that technology will make disinformation impossible to fight, fatally undermining democracy. How worried should you be?

Disinformation has existed for as long as there have been two sides to an argument. Rameses II did not win the battle of Kadesh in 1274bc. It was, at best, a draw; but you would never guess that from the monuments the pharaoh built in honour of his triumph. Julius Caesar’s account of the Gallic wars is as much political propaganda as historical narrative. The age of print was no better. During the English civil war of the 1640s, press controls collapsed, prompting much concern about “scurrilous and fictitious pamphlets”.

The internet has made the problem much worse. False information can be distributed at low cost on social media; ai also makes it cheap to produce. Much about disinformation is murky. But in a special Science & technology section, we trace the complex ways in which it is seeded and spread via networks of social-media accounts and websites. Russia’s campaign against Ms Zelenska, for instance, began as a video on YouTube, before passing through African fake-news websites and being boosted by other sites and social-media accounts. The result is a deceptive veneer of plausibility.

Spreader accounts build a following by posting about football or the British royal family, gaining trust before mixing in disinformation. Much of the research on disinformation tends to focus on a specific topic on a particular platform in a single language. But it turns out that most campaigns work in similar ways. The techniques used by Chinese disinformation operations to bad-mouth South Korean firms in the Middle East, for instance, look remarkably like those used in Russian-led efforts to spread untruths around Europe.

The goal of many operations is not necessarily to make you support one political party over another. Sometimes the aim is simply to pollute the public sphere, or sow distrust in media, governments, and the very idea that truth is knowable. Hence the Chinese fables about weather weapons in Hawaii, or Russia’s bid to conceal its role in shooting down a Malaysian airliner by promoting several competing narratives.

All this prompts concerns that technology, by making disinformation unbeatable, will threaten democracy itself. But there are ways to minimise and manage the problem.

Encouragingly, technology is as much a force for good as it is for evil. Although ai makes the production of disinformation much cheaper, it can also help with tracking and detection. Even as campaigns become more sophisticated, with each spreader account varying its language just enough to be plausible, ai models can detect narratives that seem similar. Other tools can spot dodgy videos by identifying faked audio, or by looking for signs of real heartbeats, as revealed by subtle variations in the skin colour of people’s foreheads.

Better co-ordination can help, too. In some ways the situation is analogous to climate science in the 1980s, when meteorologists, oceanographers and earth scientists could tell something was happening, but could each see only part of the picture. Only when they were brought together did the full extent of climate change become clear. Similarly, academic researchers, ngos, tech firms, media outlets and government agencies cannot tackle the problem of disinformation on their own. With co-ordination, they can share information and spot patterns, enabling tech firms to label, muzzle or remove deceptive content. For instance, Facebook’s parent, Meta, shut down a disinformation operation in Ukraine in late 2023 after receiving a tip-off from Google.

But deeper understanding also requires better access to data. In today’s world of algorithmic feeds, only tech companies can tell who is reading what. Under American law these firms are not obliged to share data with researchers. But Europe’s new Digital Services Act mandates data-sharing, and could be a template for other countries. Companies worried about sharing secret information could let researchers send in programs to be run, rather than sending out data for analysis.

Such co-ordination will be easier to pull off in some places than others. Taiwan, for instance, is considered the gold standard for dealing with disinformation campaigns. It helps that the country is small, trust in the government is high and the threat from a hostile foreign power is clear. Other countries have fewer resources and weaker trust in institutions. In America, alas, polarised politics means that co-ordinated attempts to combat disinformation have been depicted as evidence of a vast left-wing conspiracy to silence right-wing voices online.

One person’s fact…

The dangers of disinformation need to be taken seriously and studied closely. But bear in mind that they are still uncertain. So far there is little evidence that disinformation alone can sway the outcome of an election. For centuries there have been people who have peddled false information, and people who have wanted to believe them. Yet societies have usually found ways to cope. Disinformation may be taking on a new, more sophisticated shape today. But it has not yet revealed itself as an unprecedented and unassailable threat.